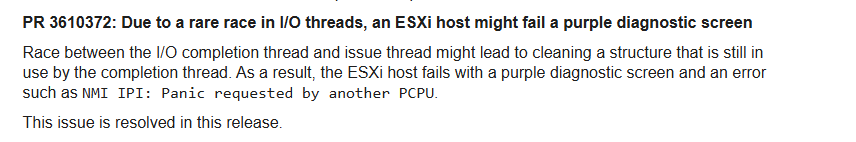

*** Update 26-02-2026 ***

This showed up in the “ESXi 8.0 Update 3i Release Notes”, which were released last night:

So there might be a chance that this fixes it. My lab host with the Realtek driver has now been updated – stay tuned for an update!

*** Original post ***

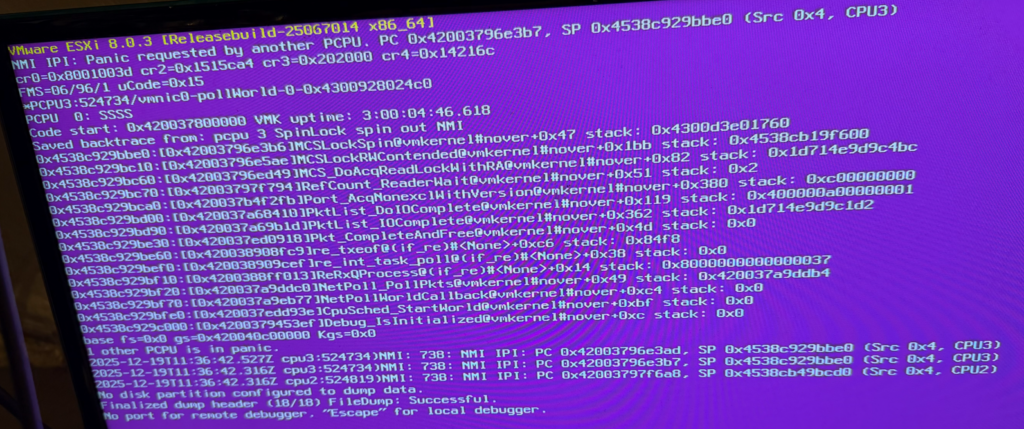

I’m trying to figure out why my Maxtang NUC is experiencing a PSOD every 3–4 days after I started using the Realtek driver fling (there are no PSODs when using the USB fling).

Realtek driver:

VMware-Re-Driver_1.101.00-5vmw.800.1.0.20613240.zip

Does anyone have any clues? If so, please reply in the comments section 🙂