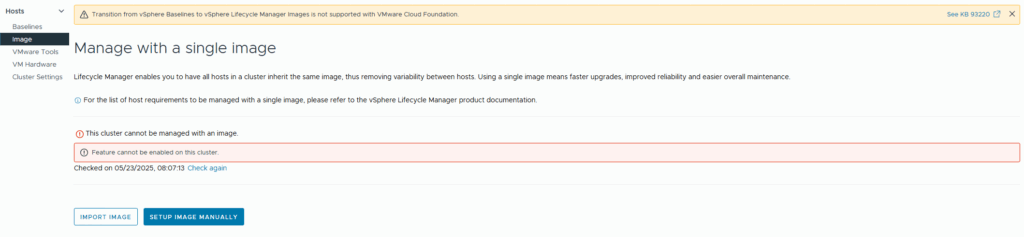

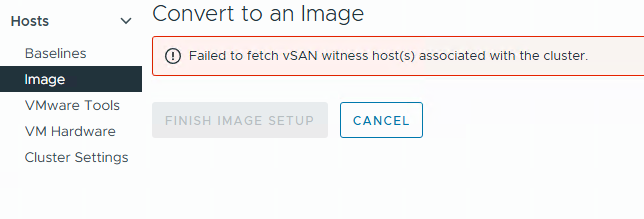

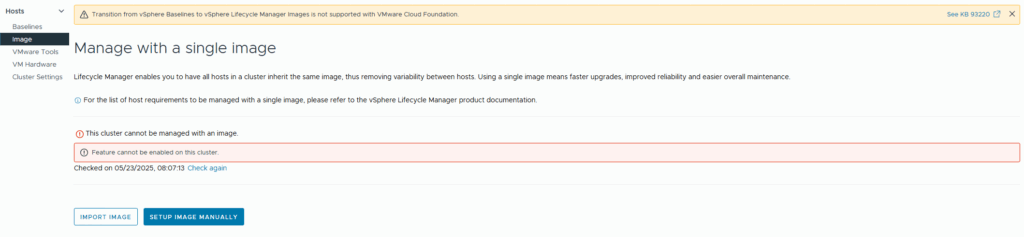

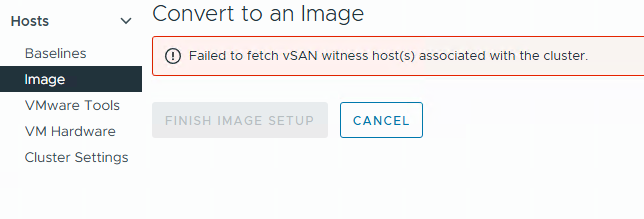

I recently encountered an error in VMware vLCM: “Feature cannot be enabled on this cluster”. This was followed by another message: “Failed to fetch vSAN witness host associated with the cluster.”

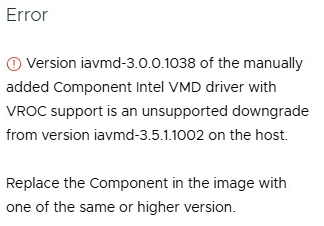

I have recently encountered the following error on several Lenovo VX systems, which prevents vLCM from initiating the upgrade from ESXi 7 to ESXi 8:

Error: Version iavmd-3.0.0.1038 of the manually added Component Intel VMD driver with VROC support is an unsupported downgrade from version iavmd-3.5.1.1002 on the host.

Replace the Component in the image with one of the same or higher version.
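The error comes down to a version comparison: vLCM refuses to apply an image whose component version is lower than the one already installed on the host. Below is a minimal Python sketch of that check. It assumes the version is the dot-separated numeric part after the last `-` and can be compared field by field. vLCM's exact comparison rules are not described here, and `parse_version` and `is_downgrade` are hypothetical helper names.

```python
from typing import Tuple


def parse_version(component: str) -> Tuple[int, ...]:
    """Turn 'iavmd-3.5.1.1002' into (3, 5, 1, 1002).

    Assumption: the version is everything after the last '-' and consists
    only of dot-separated integers.
    """
    version = component.rsplit("-", 1)[-1]
    return tuple(int(part) for part in version.split("."))


def is_downgrade(image_component: str, host_component: str) -> bool:
    """True if the component in the image is older than the one on the host."""
    # Tuples compare element by element, so (3, 0, ...) < (3, 5, ...).
    return parse_version(image_component) < parse_version(host_component)


image = "iavmd-3.0.0.1038"  # version in the vLCM image (from the error)
host = "iavmd-3.5.1.1002"   # version installed on the host (from the error)
print(is_downgrade(image, host))  # True
```

The fix the error message suggests, adding iavmd 3.5.1.1002 or newer to the image, is the case where `is_downgrade` returns `False`.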

If you encounter the error above while using VMware vLCM together with the “HPE OneView for vCenter Plugin,” you can most likely resolve it by running a single command directly on the ESXi host. You will need access to the ESXi Shell or an SSH session on the host:

sut -set mode=AutoDeploy

This command sets the mode to “Auto Deploy,” which is required for VMware vLCM and the HPE OneView integration to work properly.

To verify the current mode on your ESXi host, run:

sut -exportconfig

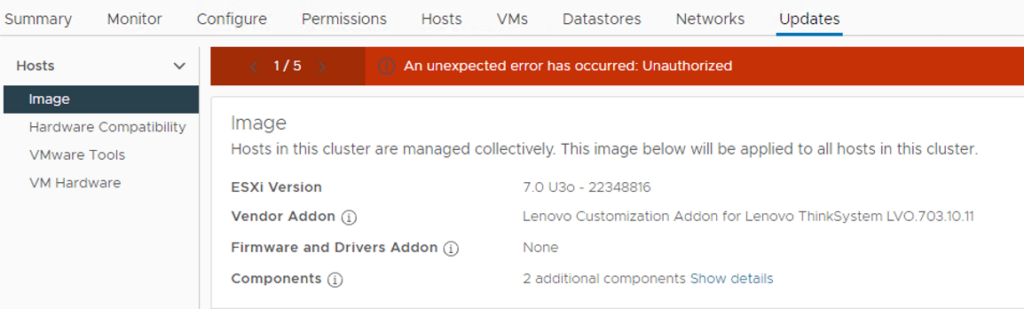

I recently encountered the above error in VMware vLCM while working in a multi-site vCenter environment. The issue was initially identified by a site administrator who had full administrative rights over the datacenter object they managed.

The root cause of this issue was the site administrator’s restricted access. Although they had full permissions on their own datacenter, they lacked global administrative privileges across the entire vCenter, and vLCM requires broader access rights to function correctly.

After investigating the vCenter roles and permissions, I was able to identify the minimal privileges needed to resolve the issue without granting excessive access.

The solution:

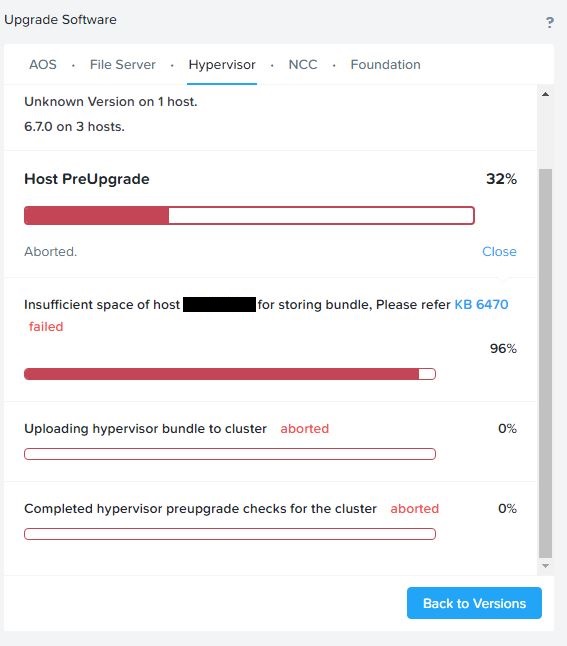

I recently ran into the problem above with a customer while trying to upgrade ESXi from the Prism interface. KB6470 mentioned that it might be related to the ESXi scratch partition not having enough free space, but that wasn’t the issue here.
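If you want to rule out the scratch partition the way KB6470 suggests, a quick free-space check is enough. Below is a minimal Python sketch. It assumes Python is available where you run it and that `/scratch` is the scratch location on the host. The 500 MiB threshold is a placeholder, not a value taken from the KB, and `has_free_space` is a hypothetical helper name.

```python
import os
import shutil
import sys


def has_free_space(path: str, required_mb: int) -> bool:
    """Return True if the filesystem holding `path` has at least `required_mb` MiB free."""
    usage = shutil.disk_usage(path)
    free_mb = usage.free // (1024 * 1024)
    total_mb = usage.total // (1024 * 1024)
    print(f"{path}: {free_mb} MiB free of {total_mb} MiB")
    return free_mb >= required_mb


if __name__ == "__main__":
    # Assumed scratch location; pass a different path as the first argument.
    path = sys.argv[1] if len(sys.argv) > 1 else "/scratch"
    REQUIRED_MB = 500  # placeholder threshold, check the KB for the real figure
    if not os.path.isdir(path):
        print(f"{path} not found; pass the scratch location as an argument.")
    elif has_free_space(path, REQUIRED_MB):
        print("Scratch space looks sufficient, so the cause is likely elsewhere.")
    else:
        print("Scratch space is low; free some space before retrying the upgrade.")
```

If the check passes, as it did in this case, the scratch partition can be ruled out and troubleshooting can move on.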

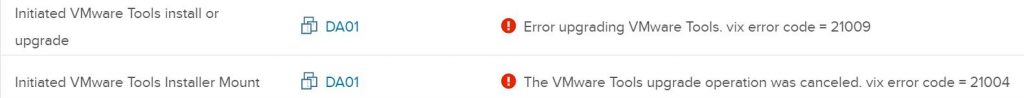

After I upgraded my home lab from ESXi 7.0 to 7.0 Update 1c (17325551), I ran into an issue updating VMware Tools on my VMs.

I tried both update options (“automatically” and “manually”), but both failed with a VIX error.

Automatic update output: “vix error code = 21004”

Manual update output: “vix error code = 21009”

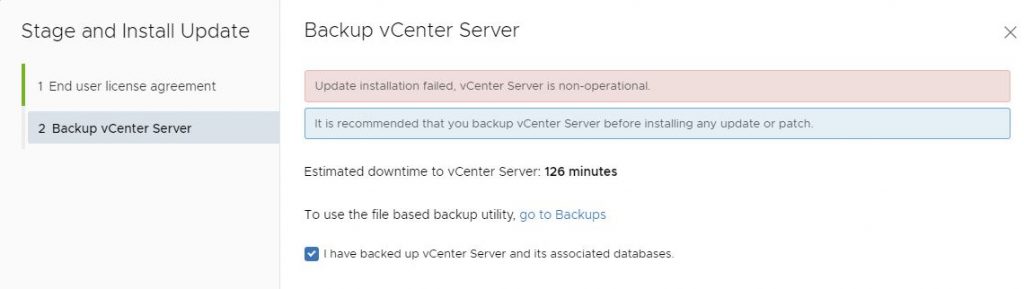

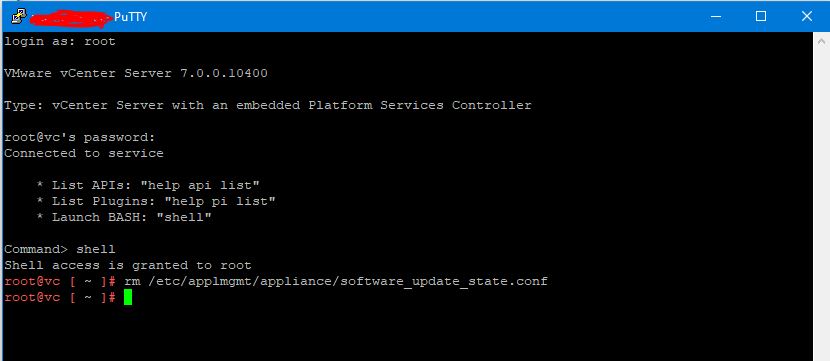

I recently ran into this error while upgrading my homelab vCenter from 7.0.0.10400 to 7.0.10600:

vCenter: update installation failed, vCenter Server is non-operational

Luckily, the fix was easy – all I needed to do was to delete the file “/etc/applmgmt/appliance/software_update_state.conf”

So you just need to SSH to your vCenter and execute this command:

rm /etc/applmgmt/appliance/software_update_state.conf
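If you would rather keep a copy of the file than delete it outright, you can move it aside under a timestamped name instead. Below is a minimal Python sketch of that variation, which is not part of the original fix. It assumes you run it as root on the appliance and that Python 3 is available there. `move_aside` is a hypothetical helper name.

```python
import os
import shutil
import time
from typing import Optional

STATE_FILE = "/etc/applmgmt/appliance/software_update_state.conf"


def move_aside(path: str) -> Optional[str]:
    """Rename `path` to `path.<timestamp>.bak` and return the new name.

    Returns None if the file does not exist, so running it twice is harmless.
    """
    if not os.path.exists(path):
        return None
    backup = f"{path}.{time.strftime('%Y%m%d%H%M%S')}.bak"
    shutil.move(path, backup)
    return backup


if __name__ == "__main__":
    result = move_aside(STATE_FILE)
    if result is None:
        print(f"{STATE_FILE} not found; nothing to do.")
    else:
        print(f"Moved {STATE_FILE} to {result}. Retry the update.")
```

The original file path no longer exists afterwards, so the effect on the update process should match the `rm` command. The difference is that the old state remains on disk if you want to inspect it later.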

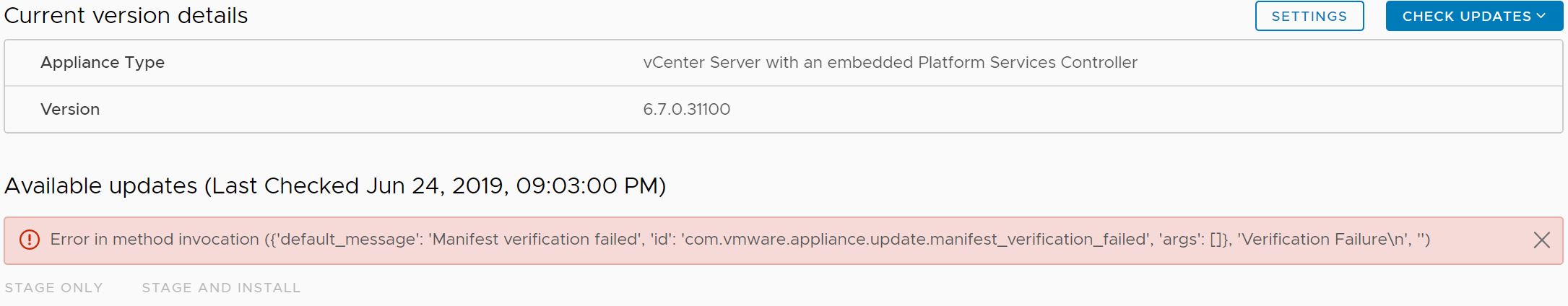

A few days ago, I decided to update my vCenter Server to version 6.7 U2c. Normally this is an easy task using the update section of the VAMI, but this time I got the following error message when I checked for updates:

Error in method invocation ({'default_message': 'Manifest verification failed', 'id': 'com.vmware.appliance.update.manifest_verification_failed', 'args': []}, 'Verification Failure\n', '')

I recently wanted to make sure that my lab environment was on the latest VCSA version (Platform Services Controller and vCenter), so I went to the VAMI on my PSC and quickly discovered that no updates were available. That was strange, because my PSC was at build 8217866 (version 6.7.0.10000), and according to the vSphere version list in KB2143838, several newer versions had been released.