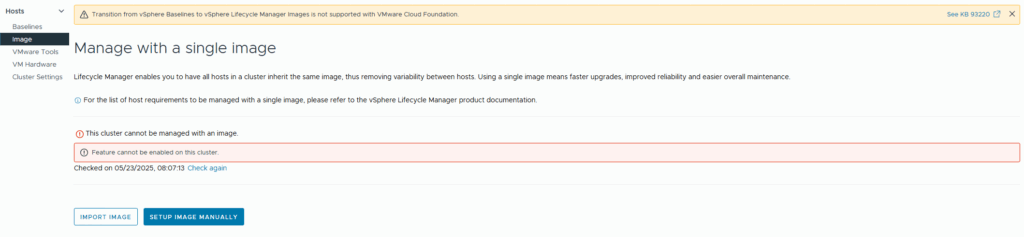

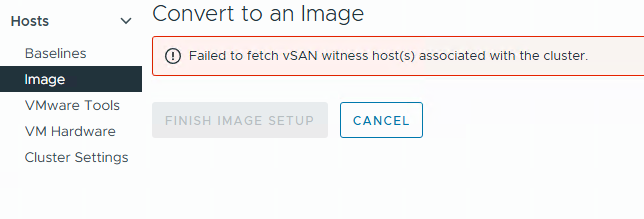

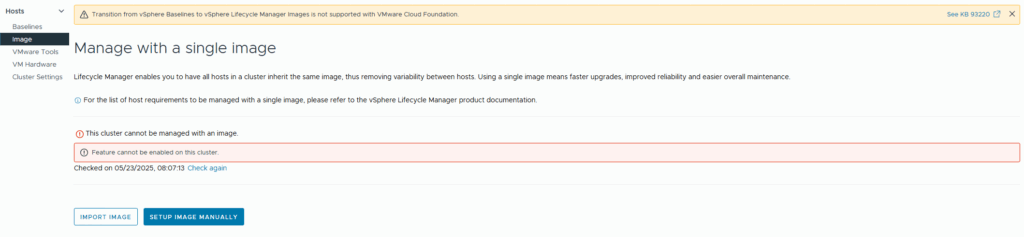

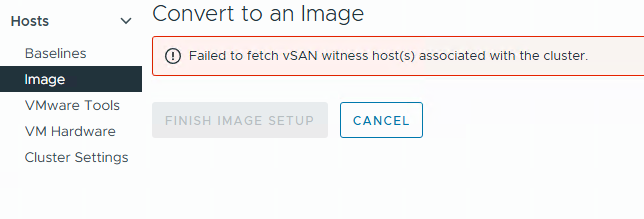

I recently encountered an error in VMware vLCM: “Feature cannot be enabled on this cluster”. This was followed by another message: “Failed to fetch vSAN witness host associated with the cluster.”

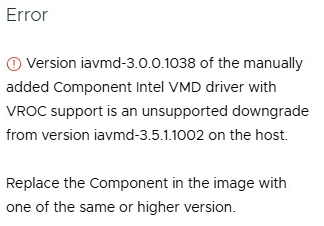

I have recently encountered the following error on several Lenovo VX systems, which prevents vLCM from initiating the upgrade from ESXi 7 to ESXi 8:

Error: Version iavmd-3.0.0.1038 of the manually added Component Intel VMD driver with VROC support is an unsupported downgrade from version iavmd-3.5.1.1002 on the host.

Replace the Component in the image with one of the same or higher version.
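vLCM rejects the image because its component version is lower than the one already on the host. The minimal Python sketch below compares the version fields numerically to show why. It is not vLCM's actual comparison logic. It assumes a name prefix followed by purely numeric, dot-separated fields, like the two versions in the message above, and would need extending for full VIB version strings that carry a build suffix after a hyphen. The function names `parse_version` and `is_downgrade` are hypothetical.

```python
def parse_version(component: str) -> tuple:
    """Turn 'iavmd-3.5.1.1002' into (3, 5, 1, 1002).

    Assumes a name prefix followed by purely numeric, dot-separated fields.
    """
    # Drop the component name: everything up to the last hyphen.
    version = component.rsplit("-", 1)[-1]
    return tuple(int(field) for field in version.split("."))


def is_downgrade(image_component: str, host_component: str) -> bool:
    """True if the image version is lower than the version on the host."""
    # Tuples compare field by field, so (3, 0, ...) < (3, 5, ...).
    return parse_version(image_component) < parse_version(host_component)


# Values taken from the vLCM error message.
image_component = "iavmd-3.0.0.1038"  # manually added component in the image
host_component = "iavmd-3.5.1.1002"   # version installed on the host

if is_downgrade(image_component, host_component):
    # prints: iavmd-3.0.0.1038 is a downgrade from iavmd-3.5.1.1002
    print(f"{image_component} is a downgrade from {host_component}")
```

Comparing integer tuples instead of raw strings matters here. As strings, "3.10" sorts before "3.9", while as tuples (3, 10) correctly sorts after (3, 9).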

If you encounter the error mentioned above while using VMware vLCM together with the “HPE OneView for vCenter Plugin”, you can most likely resolve it by running the following command directly on the ESXi host. To do this, open the ESXi Shell or SSH into the host:

sut -set mode=AutoDeploy

This command switches HPE Smart Update Tools (SUT) on the host to “AutoDeploy” mode, which is required for vLCM and the HPE OneView integration to work together properly.

To verify which mode SUT is currently using on the host, run the following command:

sut -exportconfig

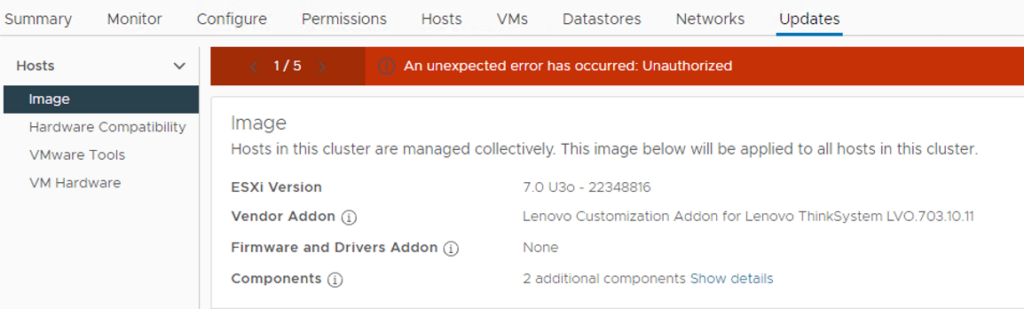

I recently encountered the above error in VMware vLCM while working in a multi-site vCenter environment. The issue was initially identified by a site administrator who had full administrative rights over the datacenter object they managed.

The root cause of this issue was the site administrator’s restricted access. Although they had full permissions on their own datacenter, they lacked administrative privileges across the entire vCenter, and vLCM requires broader access rights to function correctly.

After investigating the vCenter roles and permissions, I was able to identify the minimal privileges needed to resolve the issue without granting excessive access.

The solution:


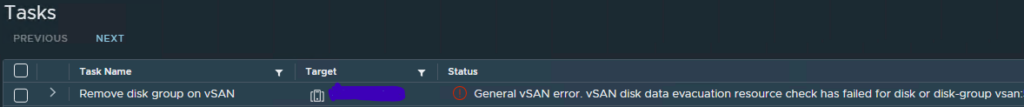

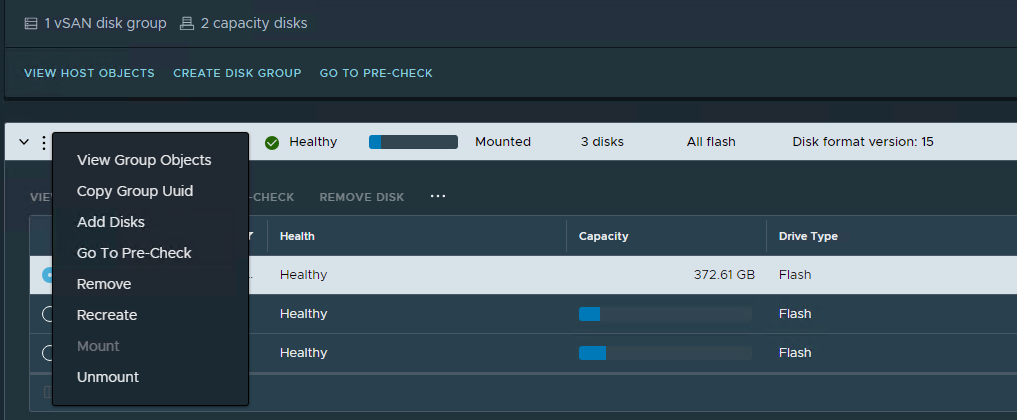

When a vSAN “cache” disk fails and is replaced, you need to rebuild the entire disk group that the cache disk belongs to. The normal way to do this is to put the host in maintenance mode, click the three dots next to the disk group and select “remove” (see picture below). Unfortunately, most of the time you get this error when trying to remove the disk group:

General vSAN error. vSAN disk data evacuation resource check has failed for disk or disk-group vsan: <UUID>

Luckily, there is an easy way to remove the disk group from the ESXi CLI that seems to work every time (at least for me!). Enable SSH on the host containing the defective disk group, locate the disk group UUID and run the following command (remember to replace <UUID>):

esxcli vsan storage remove --uuid <UUID>

If you are unable to find the UUID of the disk group, you can run this command:

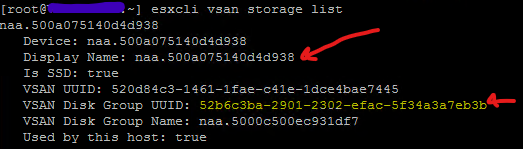

esxcli vsan storage list

This gives you output like this:

Important! If your host has more than one disk group, find the correct one by searching for a disk that belongs to it (naa.xxxxxxx, first red arrow). When you have found a disk in the group you need to delete, note its “vSAN Disk Group UUID” (second arrow). This is the UUID to pass to the “remove” command mentioned earlier!
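On a host with several disk groups, picking the right UUID from the `esxcli vsan storage list` output by eye is error-prone. The minimal Python sketch below assumes you have copied that output into a string. It also assumes each device block has a `Device:` line before its `VSAN Disk Group UUID:` line; these field names are taken from typical output and matched case-insensitively. The sample output, disk names and UUIDs are hypothetical, as is the function name `find_disk_group_uuid`. The script only prints the remove command for you to review; it does not run anything.

```python
from typing import Optional

# HYPOTHETICAL sample of `esxcli vsan storage list` output, trimmed to a few
# fields. Device names and UUIDs are made up for illustration.
SAMPLE = """\
naa.5000000000000001
   Device: naa.5000000000000001
   Is Capacity Tier: false
   VSAN Disk Group UUID: 52000000-0000-0000-0000-0000000000a1
naa.5000000000000002
   Device: naa.5000000000000002
   Is Capacity Tier: true
   VSAN Disk Group UUID: 52000000-0000-0000-0000-0000000000a1
naa.5000000000000003
   Device: naa.5000000000000003
   Is Capacity Tier: false
   VSAN Disk Group UUID: 52000000-0000-0000-0000-0000000000b1
"""


def find_disk_group_uuid(storage_list: str, disk: str) -> Optional[str]:
    """Return the disk group UUID of the block whose Device matches `disk`."""
    current_device = None
    for raw_line in storage_list.splitlines():
        line = raw_line.strip()
        if ":" not in line:
            continue  # header lines such as "naa.xxxx" carry no key/value pair
        # Split on the first colon only, so values may contain colons.
        key, _, value = line.partition(":")
        key = key.strip().lower()
        value = value.strip()
        if key == "device":
            current_device = value
        elif key == "vsan disk group uuid" and current_device == disk:
            return value
    return None


uuid = find_disk_group_uuid(SAMPLE, "naa.5000000000000002")
if uuid is None:
    print("Disk not found; do not remove anything.")
else:
    # Print the command for review instead of running it.
    print(f"esxcli vsan storage remove --uuid {uuid}")
```

Double-check the printed UUID against the real output before running the command on the host.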

If you are uncertain about what is going on here, call VMware support. Deleting the wrong disk group can have fatal consequences!

For more information, see VMware KB2150567.

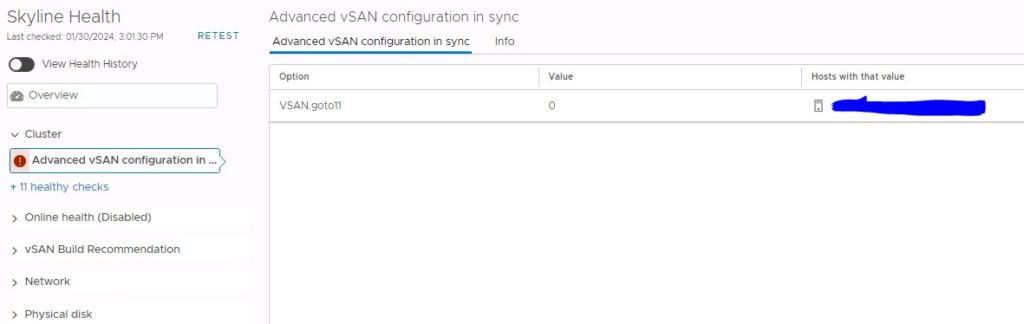

Recently I saw this “error” in Skyline Health on a vSAN cluster running version 7.0 U3o. The host reporting the error was the virtual witness appliance.

You are unlikely to come across physical Windows Server 2003 machines these days, but that is exactly what happened to me a week ago. The task was clear: this server had to be virtualized into a vSphere 7 environment running vSAN.

The problem is that to convert (P2V) a 2003 server, you need to install vCenter Converter 6.2 on it, since the latest release, 6.3, simply doesn’t work on 2003 servers (it won’t install).

The next problem is that vCenter Converter 6.2 doesn’t support vSAN 7 as a target; only “traditional storage” can be used, and in this case there was no storage other than vSAN available.

What to do? – read on…

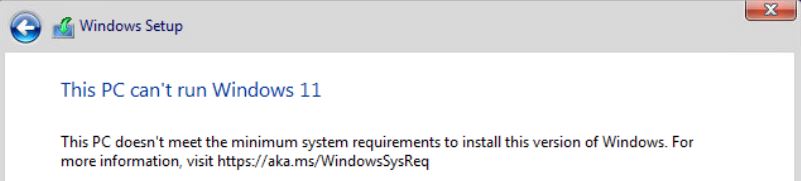

If you run into the above error when installing Windows 11 as a virtual machine on ESXi (or other virtualization platforms), be aware that there is a workaround.


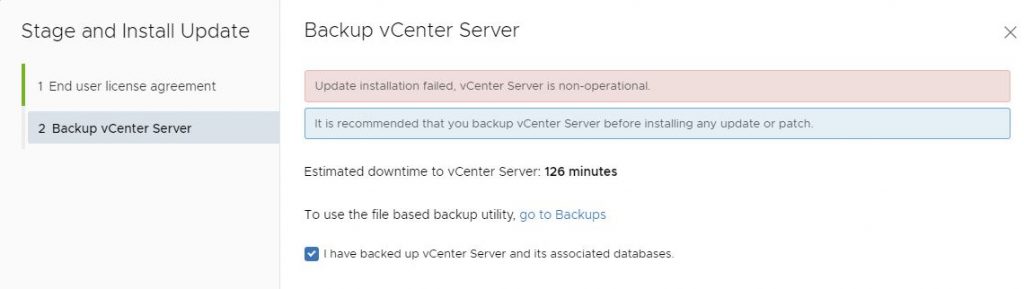

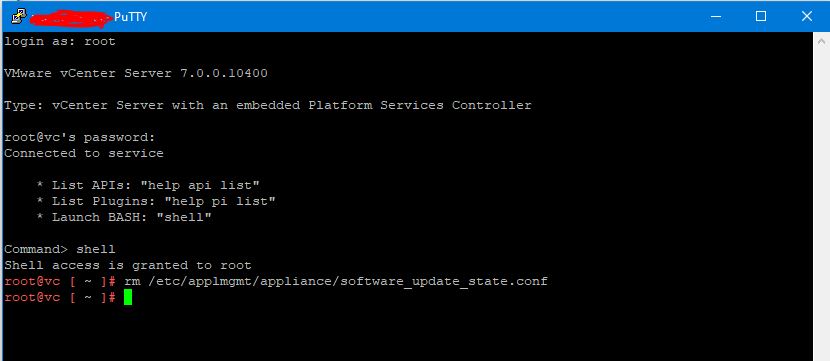

I recently ran into this error while upgrading my homelab vCenter from 7.0.0.10400 to 7.0.0.10600:

vCenter: update installation failed, vCenter Server is non-operational

Luckily, the fix was easy: all I needed to do was delete the file “/etc/applmgmt/appliance/software_update_state.conf”.

So you just need to SSH to your vCenter and execute this command:

rm /etc/applmgmt/appliance/software_update_state.conf

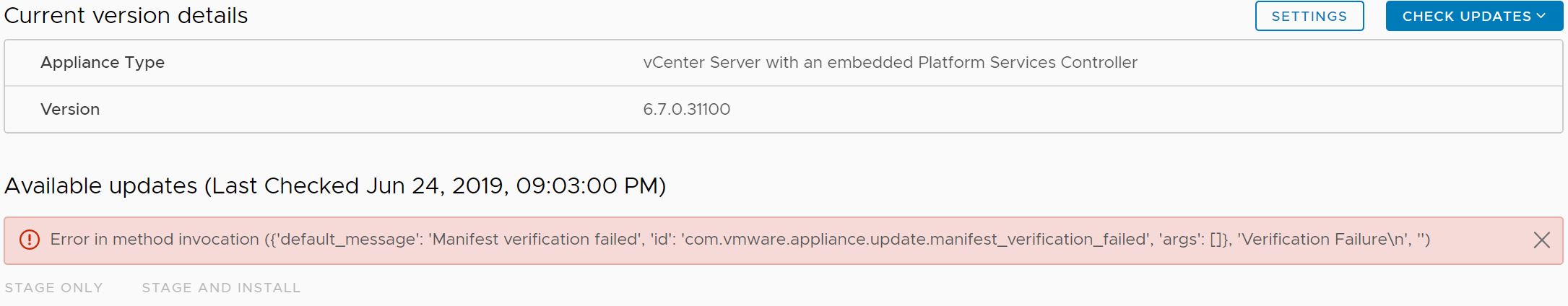

A few days ago, I decided to update my vCenter Server to version 6.7 U2c. Normally this is an easy task using the update section of the VAMI, but this time I got the following error message when I tried to check for the update:

Error in method invocation ({'default_message': 'Manifest verification failed', 'id': 'com.vmware.appliance.update.manifest_verification_failed', 'args': []}, 'Verification Failure\n', '')